Blog

With so much buzz about AI at present, we invite blog post contributions which demystify key topics.

And look out for our news updates!

By Amber Roguski. This is the second post in a two-part blog series exploring the under-representation of Minority Ethnic individuals as participants in biomedical research, and the racial bias and exclusion within that research. White people are 87% more likely to be included in medical research than people from a Minority Ethnic background, […]

As banks invest in AI solutions, they must also explore how AI bias affects customers and understand the right and wrong ways to address it. AI systems could unfairly decline new bank account applications, block payments and credit cards, and deny loans and other vital financial services and products to qualified customers because of how their […]

Technology has never been colourblind. It’s time to abolish notions of “universal” users of software. This is an overview of racial justice in tech and in AI that considers how systemic change must happen for technology to support equity.

Artificial intelligence (AI) is making rapid inroads into many aspects of our financial lives. Algorithms are being deployed to identify fraud, make trading decisions, recommend banking products, and evaluate loan applications. This is helping to reduce the costs of financial products and improve th… Through a case study of mortgage applications, this article shows how […]

Diverse workforce essential to combat new danger of ‘bias in, bias out’. This short article looks at the link between the lack of diversity in the AI workforce and the bias against ethnic minorities within financial services – the “new danger of ‘bias in, bias out’”.

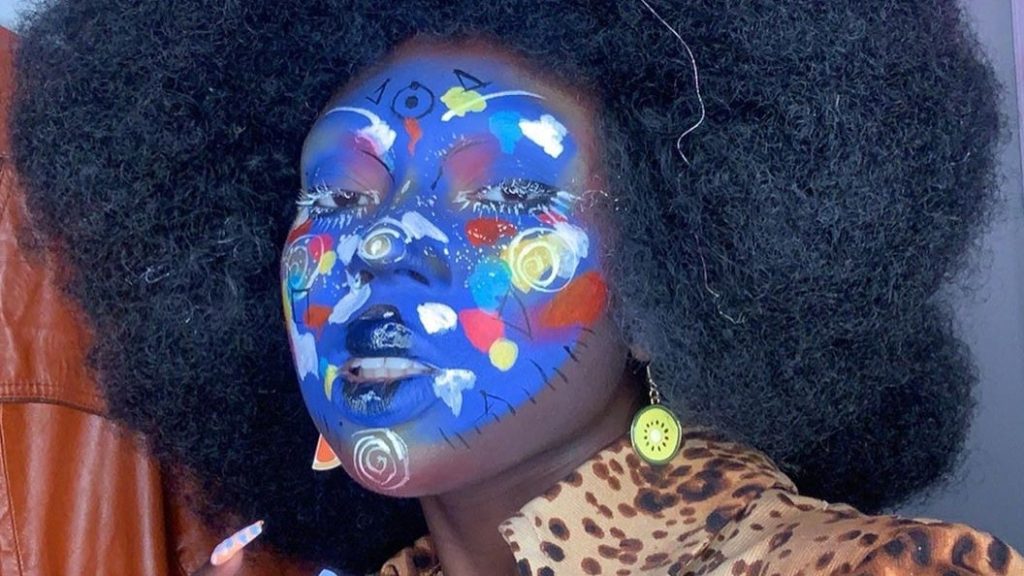

As protests against police brutality and in support of the Black Lives Matter movement continue in the wake of George Floyd’s killing, protection against mass surveillance has become top of mind. This article explains how make-up can be used both as a way to evade facial recognition systems and as an art form.

There’s software used across the country to predict future criminals. And it’s biased against blacks. This article examines software used to predict the likelihood of reoffending. It uses case studies to demonstrate the racial bias in how the software predicts the ‘risk’ of further crimes. Even for […]

A New Jersey man was accused of shoplifting and trying to hit an officer with a car. He is the third known black man to be wrongfully arrested based on face recognition.

Much of the software now revolutionising the financial services industry depends on algorithms that apply artificial intelligence (AI) – and increasingly, machine learning – to automate everything from simple, rote tasks to activities requiring sophisticated judgment. This article explains, from a US perspective, how the development of machine learning and algorithms has left financial services at risk […]

AI was supposed to be the pinnacle of technological achievement — a chance to sit back and let the robots do the work. While it’s true AI completes complex tasks and calculations faster and more accurately than any human could, it’s shaping up to need some supervision. There is data which predicts that the introduction […]

Timnit Gebru and Google. Timnit Gebru is one of the most high-profile Black women in her field and a powerful voice in the new field of ethical AI, which seeks to identify issues around bias, fairness, and responsibility. Google hired her, then fired her. This article argues that leading AI ethics researchers, such as Timnit […]

HireVue claims it uses artificial intelligence to decide who’s best for a job. Outside experts call it “profoundly disturbing.” This article examines HireVue’s “AI-driven assessments”. More than 100 employers now use the system, including Hilton and Unilever, and more than a million job seekers have been analysed.