Blog

With so much buzz about AI at present, we invite blog post contributions which demystify key topics.

And look out for our news updates!

Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification – MIT Media Lab. Recent studies demonstrate that machine learning algorithms can discriminate based on classes like race and gender. In this work, we present an approach to e… According to this paper by researchers from MIT and Stanford University, three commercially released facial-analysis programs from major […]

Read More

Resume screening is the first hurdle applicants typically face when they apply for a job. Despite the many empirical studies showing bias at the resume‐screening stage, fairness at this funnelling st… CVs are among the most frequently used screening tools worldwide. CV screening is also the first hurdle applicants typically face when they apply […]

Read More

A study on the discriminatory impact of algorithms in pre-trial bail decisions.

Read More

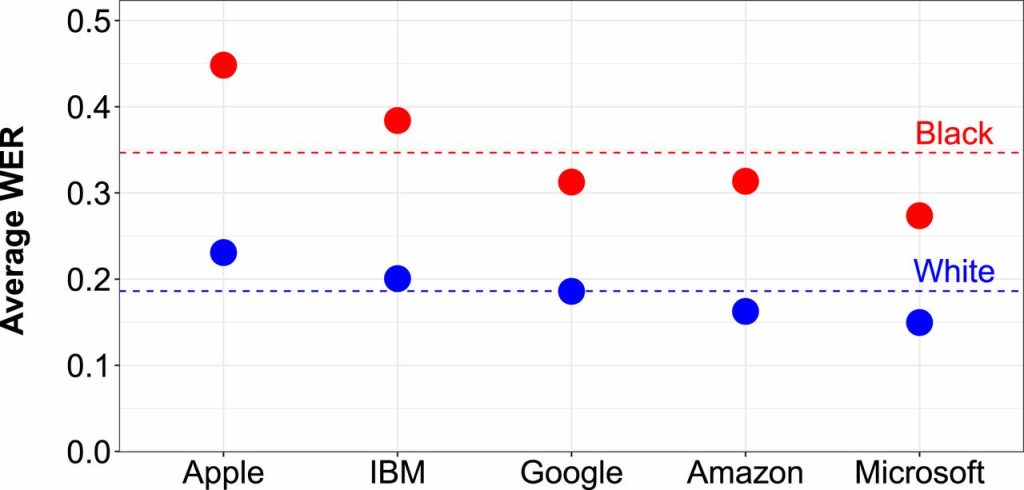

Racial disparities in automated speech recognition Automated speech recognition (ASR) systems are now used in a variety of applications to convert spoken language to text, from virtual assistants, to closed captioning, to hands-free computing. By analyzing a large corpus of sociolinguistic interviews with white and African American speakers, we demo… Analysis of five state-of-the-art automated […]

Read More

The present experiment examined how people adjust their judgment after they learn that crucial information on which their initial evaluation was based is incorrect. In line with our expectations, the results showed that people generally do adjust their attitudes, but the degree to which they correct their assessment depends on their cognitive ability. A study […]

Read More

Research paper about responsible AI Toxic and abusive language threatens the integrity of public dialogue and democracy. In response, governments worldwide have enacted strong laws against abusive language that leads to hatred, violence and criminal offences against a particular group. The responsible (i.e. effective, fair and unbiased) moderation of abusive language carries significant challenges. Our […]

Read More

A research paper about race and AI chatbots Why is it so hard for AI chatbots to talk about race? By researching databases, natural language processing, and machine learning in conjunction with critical, intersectional theories, we investigate the technical and theoretical constructs underpinning the problem space of race and chatbots. This paper questions how to […]

Read More

A paper on racial bias in hate speech Technologies for abusive language detection are being developed and applied with little consideration of their potential biases. We examine racial bias in five different sets of Twitter data annotated for hate speech and abusive language. Tweets written in African-American English are far more likely to be automatically […]

Read More

Risk of racial bias in hate speech detection This research paper investigates how insensitivity to differences in dialect can lead to racial bias in automatic hate speech detection models, potentially amplifying harm against minority populations.

Read More

The enormous financial success of online advertising platforms is partially due to the precise targeting features they offer. Although researchers and journalists have found many ways that advertisers can target – or exclude – particular groups of users seeing their ads, comparatively little attenti… Ad delivery is controlled by the advertising platform (e.g., Facebook) and researchers […]

Read More

Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification Recent studies demonstrate that machine learning algorithms can discriminate based on classes like race and gender. In this work, we present an approach to evaluate bias present in automated facial… Commercial AI facial recognition systems tend to misclassify darker-skinned females more than any other group (lighter-skinned […]

Read More