AI in Policing

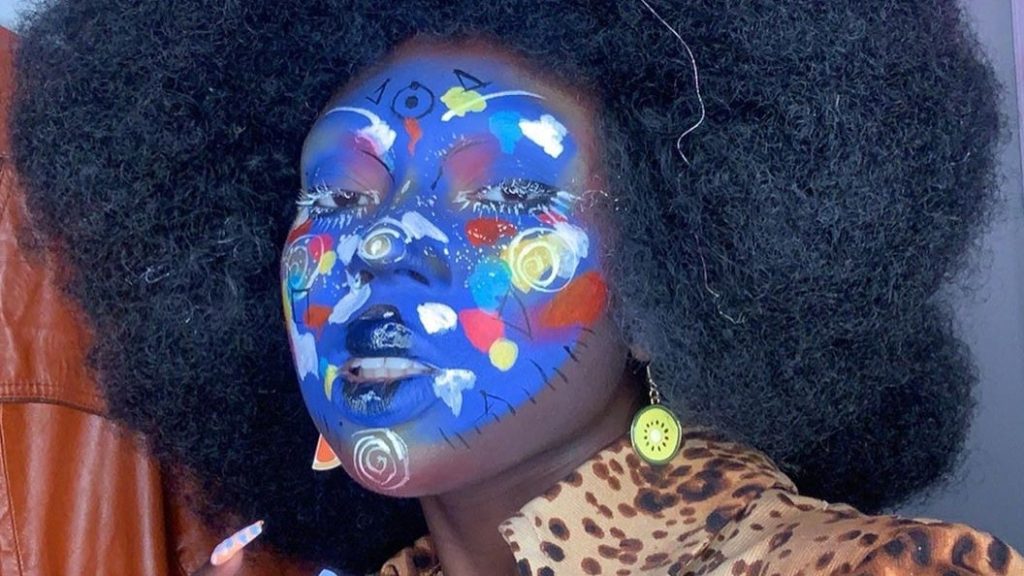

AI tools are being developed to help prevent crime, using techniques such as computer vision, pattern recognition, and historical data to build crime maps that highlight locations at higher risk of offending. Whilst they may reduce on-the-fly human bias, they risk automating systemic biases. For example, facial recognition techniques are less reliable for non-white individuals, especially Black women.

Historical data may reflect the over-policing of certain locations and the under-policing of others. These patterns are then encoded in the algorithms, which send officers back to the same areas and so reinforce their over- and under-policing in the future. The abundance of data can also turn postcode into a proxy for ethnicity, so a model may treat ethnic groups differently even when ethnicity is not one of its inputs.
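The feedback loop can be illustrated with a deliberately simplified simulation. This is a toy sketch under stated assumptions, not a model of any real system: two areas have the same true crime rate, crime is recorded only where patrols are sent, and the two allocation rules (`hotspot_policy`, `proportional_policy`) and all numbers are hypothetical.

```python
"""Toy sketch of a predictive-policing feedback loop (hypothetical model)."""


def hotspot_policy(recorded: list[float], true_rate: list[float], days: int) -> list[float]:
    """Each day, send all patrols to the area with the most recorded crime.

    Assumption: crime is only recorded where police are present, so the area
    that starts ahead keeps collecting records while the other goes unseen.
    """
    recorded = list(recorded)  # copy so the caller's history is not mutated
    for _ in range(days):
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[target] += true_rate[target]
    return recorded


def proportional_policy(recorded: list[float], true_rate: list[float], days: int,
                        patrols: float = 1.0) -> list[float]:
    """Split patrols in proportion to each area's share of recorded crime.

    Assumption: detections scale with patrol presence, so an initial skew in
    the data is carried forward unchanged instead of being corrected.
    """
    recorded = list(recorded)
    for _ in range(days):
        total = sum(recorded)
        shares = [r / total for r in recorded]  # computed before any update
        for i, share in enumerate(shares):
            recorded[i] += patrols * share * true_rate[i]
    return recorded


if __name__ == "__main__":
    # Two areas with the SAME underlying crime rate, but skewed historical records.
    history = [60.0, 40.0]
    rates = [10.0, 10.0]
    print(hotspot_policy(history, rates, days=30))    # [360.0, 40.0]
    after = proportional_policy(history, rates, days=30)
    print([round(r / sum(after), 2) for r in after])  # [0.6, 0.4]
```

Under the greedy rule the initial gap widens every day; under the proportional rule it is preserved exactly. In neither case do the records ever reveal that the two areas are, in fact, identical.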