Digital and Social Media

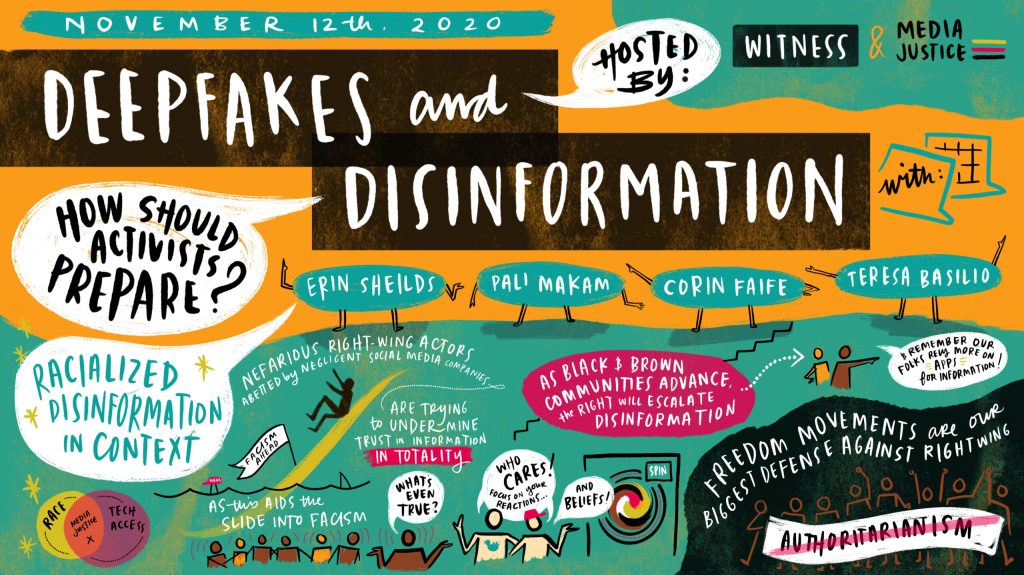

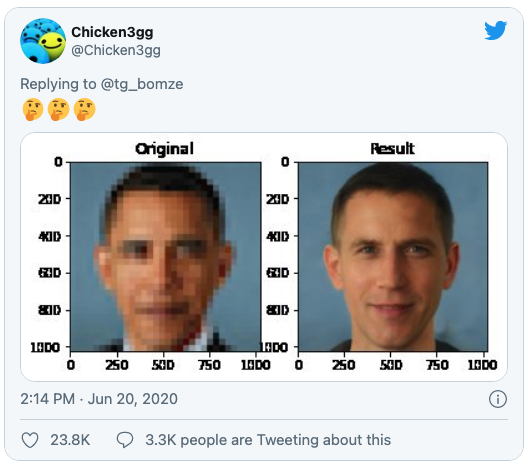

Many online platforms, such as social media websites, news websites and entertainment websites, use AI algorithms to automatically edit, curate, promote and even create content. Many of these algorithms are trained on data that may be biased in some way: it might not be diverse enough to represent the true cultural and ethnic diversity of society, or it could have been put together, consciously or unconsciously, on the basis of stereotyped or politically and socially biased ideas.

The AI algorithms then reflect those biases when performing their tasks, amplifying social prejudice across the digital and social media platforms that people use every day. When a biased AI tool chooses which articles people see in their newsfeed, or automatically edits an image to fit a certain format, people see only a biased view of the world.