Blog

With so much buzz about AI at present, we invite blog post contributions that demystify key topics.

And look out for our news updates!

Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification – MIT Media Lab. Recent studies demonstrate that machine learning algorithms can discriminate based on classes like race and gender. In this work, we present an approach to e… According to this paper by researchers from MIT and Stanford University, three commercially released facial-analysis programs from major […]

Resume screening is the first hurdle applicants typically face when they apply for a job. Despite the many empirical studies showing bias at the resume‐screening stage, fairness at this funnelling st… CVs are one of the most frequently used screening tools worldwide. CV screening is also the first hurdle applicants typically face when they apply […]

High-definition research into employee attitudes. A comprehensive report on worker sentiment about the general outlook for the workplace and the long-term prospects of the jobs they currently hold. It reports high levels of anxiety among workers due to automation.

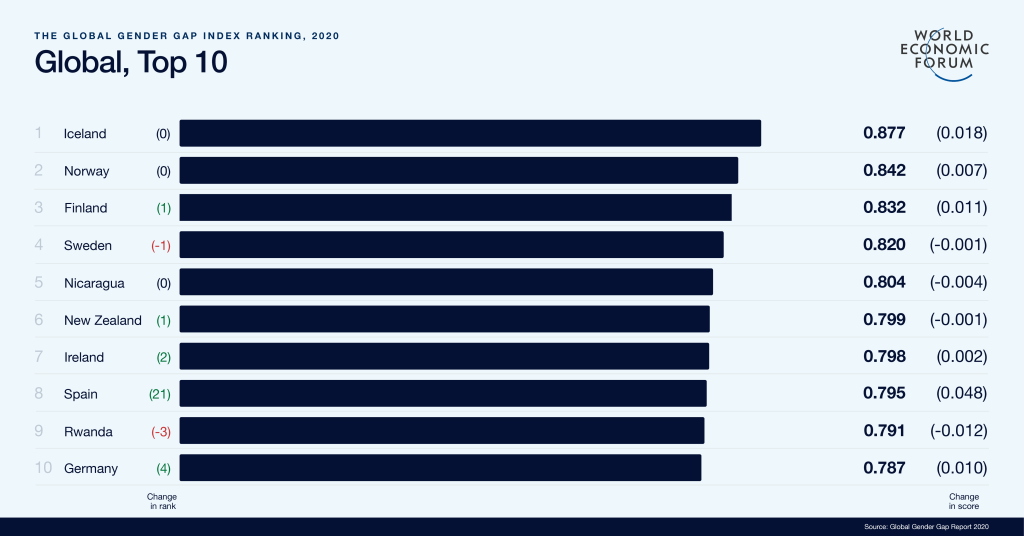

After years of growing income inequality, concerns about technology-driven displacement of jobs, and rising societal discord globally, the combined health and economic shocks of 2020 have put economies into freefall, disrupted labour markets and fully revealed the inadequacies of our social contract… A comprehensive report on the future of work and the increasing automation and digitalisation of tasks and […]

This white paper takes a deeper dive into the data and algorithm that underestimated the pass rates of students of certain nationalities, looking at how data and modelling can lead to bias.

A case study from ResearchGate. We present a project that aims to generate images that depict accurate, vivid, and personalized outcomes of climate change using Cycle-Consistent Adversarial Networks (CycleGANs). The case study explores the potential of using images from a simulated 3D environment to improve a domain adaptation task carried out by the MUNIT architecture, aiming […]

A study on the discriminatory impact of algorithms in pre-trial bail decisions.

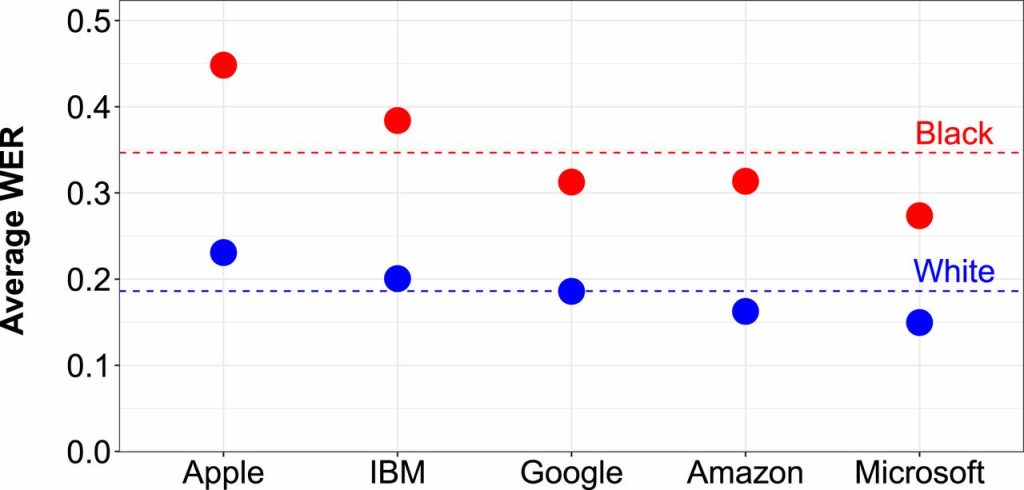

Racial disparities in automated speech recognition. Automated speech recognition (ASR) systems are now used in a variety of applications to convert spoken language to text, from virtual assistants to closed captioning to hands-free computing. By analyzing a large corpus of sociolinguistic interviews with white and African American speakers, we demo… Analysis of five state-of-the-art automated […]

Facial recognition is not the next generation of CCTV. Whilst CCTV takes pictures, facial recognition takes measurements: the distance between your eyes, the length of your nose, the shape of your face. In this sense, facial recognition is the next generation of fingerprinting. It is a highly intrusive form of surveillance which everyone […]

A research paper about race and AI chatbots. Why is it so hard for AI chatbots to talk about race? By researching databases, natural language processing, and machine learning in conjunction with critical, intersectional theories, we investigate the technical and theoretical constructs underpinning the problem space of race and chatbots. This paper questions how to […]

A paper on racial bias in hate speech detection. Technologies for abusive language detection are being developed and applied with little consideration of their potential biases. We examine racial bias in five different sets of Twitter data annotated for hate speech and abusive language. Tweets written in African-American English are far more likely to be automatically […]

Risk of racial bias in hate speech detection. This research paper investigates how insensitivity to differences in dialect can lead to racial bias in automatic hate speech detection models, potentially amplifying harm against minority populations.