Blog

With so much buzz about AI at present, we invite blog post contributions which demystify key topics.

And look out for our news updates!

Opinion: By Tess Buckley. My Grandpa (or, as I called him, Boppy) used to read the paper every morning. I remember eating my eggs in silence with him and squinting, trying my best to catch whatever was on the other side of his reading. He shook the papers before flipping to the next side as […]


A new article in the Journal of the American Medical Informatics Association points to the dissemination of “under-developed and potentially biased models” in response to the novel coronavirus. This article draws on recent medical research which shows how potentially biased models informing our health care systems have impacted COVID-19. These biased models could exacerbate the […]


Earlier this week, Google announced the arrival of a new AI app to help diagnose skin conditions. It plans to launch it in Europe later this year. This article discusses mobile apps that aid the self-diagnosis of skin conditions. The apps are intended to be inclusive of all skin types; however, the training data was […]


Medical devices employing AI stand to benefit everyone in society, but if left unchecked, the technologies could unintentionally perpetuate sex, gender and race biases. Medical devices utilising AI technologies stand to reduce general biases in the health care system; however, if left unchecked, the technologies could unintentionally perpetuate sex, gender, and race biases. The AI […]


Sweeping calculation suggests it could be — but how to fix the problem is unclear. An estimated one million black adults would be transferred earlier for kidney disease if US health systems removed a ‘race-based correction factor’ from an algorithm they use to diagnose people and decide whether to administer medication. There is a debate […]


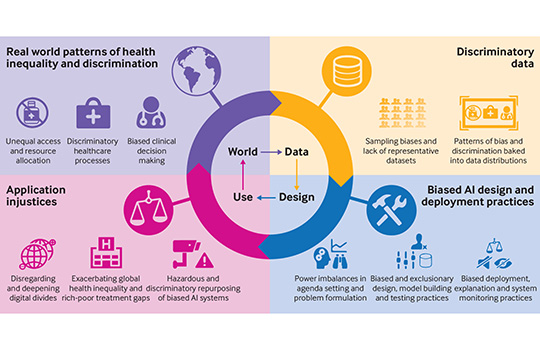

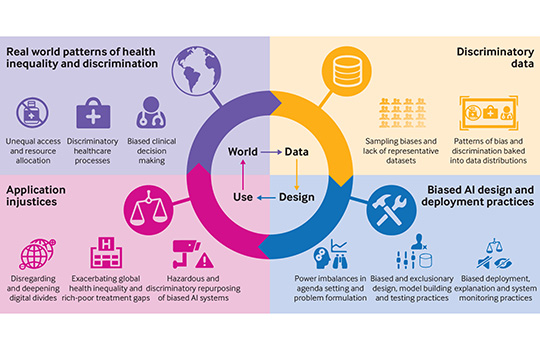

Artificial intelligence can help tackle the covid-19 pandemic, but bias and discrimination in its design and deployment risk exacerbating existing health inequity, argue David Leslie and colleagues. Among the most damaging characteristics of the covid-19 pandemic has been its disproportionate effect… A team of medical ethics researchers are arguing that bias and discrimination within AI […]


Many devices and treatments work less well for them. This article explores how the pulse oximeter, a device used to test oxygen levels in blood for coronavirus patients, exhibits racial bias. Medical journals give evidence that pulse oximeters overestimated blood-oxygen saturation more frequently in black people than in white people.


Pulse oximeters give biased results for people with darker skin. The consequences could be serious. COVID-19 care has brought the pulse oximeter into the home; it is a medical device that helps you understand your oxygen saturation levels. This article examines research that shows oximetry’s racial bias. Oximeters have been calibrated, tested and developed using light-skinned […]


Technology influences the way we eat, sleep, exercise, and perform our daily routines. But what to do when we discover the technology we rely on is built on faulty methodology and… Health monitoring devices influence the way that we eat, sleep, exercise, and perform our daily routines. But what do we do when we discover […]


Many popular wearable heart rate trackers rely on technology that could be less accurate for consumers who have darker skin, researchers, engineers and other experts told STAT. An estimated 40 million people in the US alone have smartwatches or fitness trackers that can monitor heartbeats. However, some people of colour may be at risk of […]


New research shows that AI models designed for health care settings can exhibit bias against certain ethnic and gender groups. Machine learning models for healthcare hold promise in improving medical treatments by improving predictions of care and mortality; however, their black-box nature and bias in training data sets leave them vulnerable to instead hinder […]

