Millions of black people affected by racial bias in healthcare algorithms

Study reveals rampant racism in decision-making software used by US hospitals — and highlights ways to correct it. An algorithm widely used in US hospitals to allocate health care to patients has been systematically discriminating against black people, a sweeping analysis has found. The study concluded that the algorithm was less likely to refer black […]

The Health Pulse: AI Bias in Healthcare

Show: Analytics Exchange: Podcasts from SAS. Episode: The Health Pulse: AI and Bias in Healthcare (Mar 5, 2021). Data scientist Hiwot Tesfaye joins Greg for a conversation about the use of algorithms in healthcare and how models can introduce bias. They’ll discuss current examples of healthcare bias, who should be held responsible and […]

Bias and Discrimination in Healthcare AI Models

AI is helping healthcare organisations determine care management programs and treatment plans – who gets what care – but these models and algorithms can be biased and introduce discrimination in the allocation or denial of care.

Understanding Racial Bias in Medical AI Training Data

By Adriana Krasniansky. Interest in artificially intelligent (AI) health care has grown at an astounding pace: the global AI health care market is expected to reach $17.8 billion by 2025, and AI-powered systems are being designed to support medical activities ranging from patient diagnosis and triagin…

AI Bias May Worsen COVID-19 Health Disparities for People of Colour

A new article in the Journal of the American Medical Informatics Association points to the dissemination of “under-developed and potentially biased models” in response to the novel coronavirus. This article draws on recent medical research which shows how potentially biased models informing our health care systems have impacted COVID-19. These biased models could exacerbate the […]

Google Announces New AI App To Diagnose Skin Conditions

Earlier this week, Google announced the arrival of a new AI app to help diagnose skin conditions. It plans to launch it in Europe later this year. This article discusses mobile apps that aid the self-diagnosis of skin conditions. The apps are intended to be inclusive of all skin types; however, the training data was […]

How medicine discriminates against non-white people and women

Many devices and treatments work less well for them. This article explores how the pulse oximeter, a device used to measure blood-oxygen levels in coronavirus patients, exhibits racial bias. Medical journals provide evidence that pulse oximeters overestimate blood-oxygen saturation more frequently in black people than in white people.

Fitbits and other wearables may not accurately track heart rates in people of colour

Many popular wearable heart rate trackers rely on technology that could be less accurate for consumers who have darker skin, researchers, engineers and other experts told STAT. An estimated 40 million people in the US alone have smartwatches or fitness trackers that can monitor heartbeats. However, some people of colour may be at risk of […]

Studies find bias in AI models that recommend treatments and diagnose diseases

New research shows that AI models designed for health care settings can exhibit bias against certain ethnic and gender groups. Machine learning models for healthcare hold promise for improving medical treatment through better predictions of care and mortality; however, their black-box nature and biased training data sets leave them vulnerable to instead hinder […]

Bias + Artificial Intelligence (in Medicine)

Talk by Rachel Thomas on the prevalence of bias within AI-based technology used in medicine. AI has the potential to remove human biases from the healthcare system; however, its integration within medicine could also amplify existing biases.

Racial Bias in Science and Medicine: Who’s Included?

A short video examining the lack of inclusion within clinical biomedical research and the consequences this has for the effectiveness of treatments and medicines for non-white patients. Lack of research on minority patients means that we do not understand racial differences in drug response, and so approved medical treatments are excluding a huge […]

‘Objective’ Science and White Bias: BAME Under-Representation in Biomedical Research (Part 2)

By Amber Roguski. This is the second post in a two-part blog series on the under-representation of Minority Ethnic individuals as participants in biomedical research, and it examines racial bias and exclusion within that research. White people are 87% more likely to be included in medical research than people from a Minority Ethnic background, […]

Biases are being baked into artificial intelligence

When it comes to decision making, it might seem that computers are less biased than humans. But algorithms can be just as biased as the people who create the… Quick, concise Axios video that describes algorithmic bias and how and why human bias ends up in systems used for hiring and criminal justice, among other things.

Racist Robots? How AI bias may put financial firms at risk

Artificial intelligence (AI) is making rapid inroads into many aspects of our financial lives. Algorithms are being deployed to identify fraud, make trading decisions, recommend banking products, and evaluate loan applications. This is helping to reduce the costs of financial products and improve th… Through a case study of mortgage applications, this article shows how […]

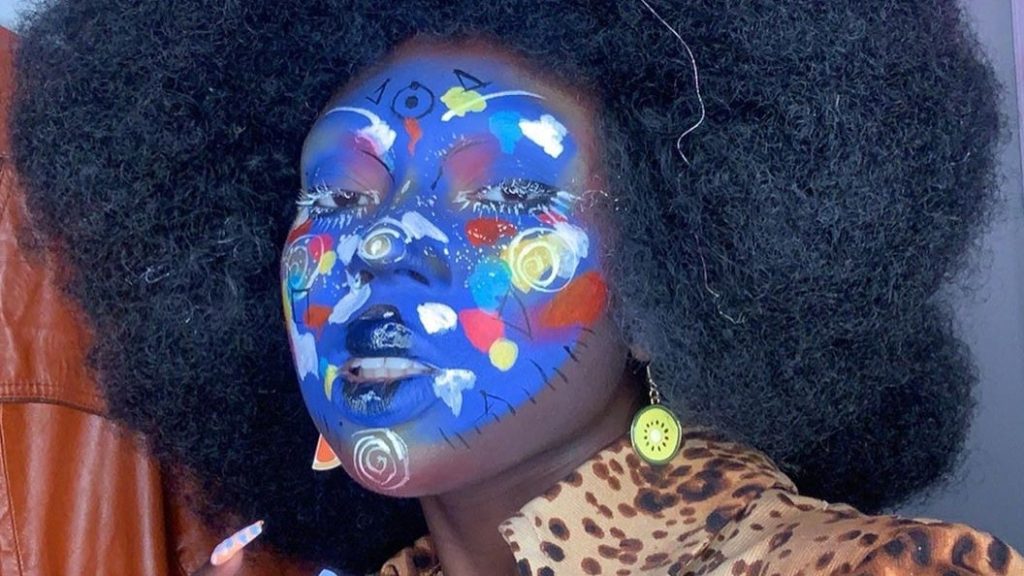

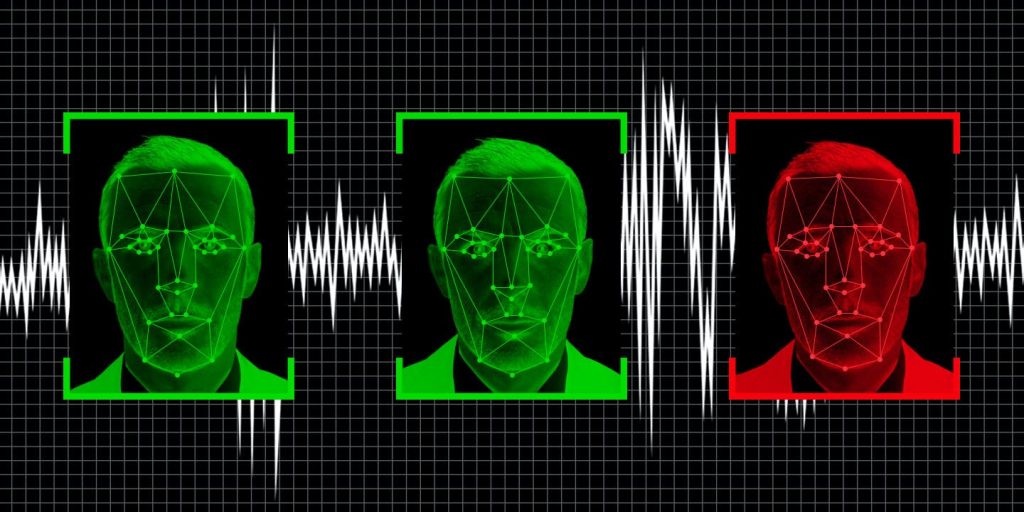

Can make-up be an anti-surveillance tool?

As protests against police brutality and in support of the Black Lives Matter movement continue in the wake of George Floyd’s killing, protection against mass surveillance has become top of mind. This article explains how make-up can be used both as a way to evade facial recognition systems and as an art form.

Another arrest, and jail time, due to a bad facial recognition match

A New Jersey man was accused of shoplifting and trying to hit an officer with a car. He is the third known black man to be wrongfully arrested based on face recognition.

AI Perpetuating Human Bias in the Lending Space

AI was supposed to be the pinnacle of technological achievement — a chance to sit back and let the robots do the work. While it’s true AI completes complex tasks and calculations faster and more accurately than any human could, it’s shaping up to need some supervision. There is data which predicts that the introduction […]

Google hired Timnit Gebru to be an outspoken critic of unethical AI. Then she was fired for it.

Timnit Gebru is one of the most high-profile Black women in her field and a powerful voice in the new field of ethical AI, which seeks to identify issues around bias, fairness, and responsibility. Google hired her, then fired her. This article argues that leading AI ethics researchers, such as Timnit […]

Amazon scraps AI recruiting tool showing bias against women

In 2018, Amazon’s use of AI for hiring was discovered to favour male job candidates, because its algorithms had been trained on 10 years’ worth of internal data that heavily skewed male. The algorithm was trained, in effect, to believe that male candidates were better than female candidates.

This tech startup uses AI to eliminate all hiring biases

This video argues that hiring is largely analogue and broken. This leads to major problems such as inefficiency, ineffectiveness (50% of first-year hires fail), poor candidate experience, and lack of diversity. The hiring process is plagued by gender bias, age bias, socioeconomic bias, and racial bias. Pymetrics intentionally audits algorithms to weed out unconscious human […]

PwC facial recognition tool criticised for home working privacy invasion

Accounting giant PwC has come under fire for the development of a facial recognition tool that logs when employees are absent from their computer screens while they work from home. The technology, which is being developed specifically for financial institutions, recognises the faces of workers via t…

AI will impact future of jobs

Will AI eliminate more jobs than it creates? Experts weigh in on the impact of AI on future jobs, a hot topic that affects almost every industry.

An AI expert told ’60 Minutes’ that AI could replace 40% of jobs

Artificial intelligence can replace repetitive tasks, but it doesn’t have the empathy to lead. Provides an AI expert’s anecdotal view of which jobs are already being displaced by AI and automation.

The Misinformation Edition of the Glass Room

The Misinformation Edition of the Glass Room is an online version of a physical exhibition that explores different types of misinformation and teaches people how to recognise it and combat its spread.

Remote testing monitored by AI is failing the students forced to undergo it

An opinion piece giving examples of students who have been severely disadvantaged by exam software, including a Muslim woman who was made by the software to remove her hijab to prove she was not hiding anything behind it.

Exams that use facial recognition may be ‘fair’ – but they’re also intrusive

News article arguing that whilst AI facial recognition during exams might be fair, it is an invasion of privacy and risks introducing unwarranted biases.

In Hong Kong, this AI reads children’s emotions as they learn…

Facial recognition AI, combined with other AI assessment, is used to spot how children are performing and boost their performance. However, there is concern that it may not work so well for students with non-Chinese ethnicities who were not part of the training data.

Inbuilt biases and the problem of algorithms

This article details the algorithm used to inform A Level results for students who could not take exams due to the 2020 pandemic. The algorithm took into account the postcode of the student, which meant that students from lower income areas were more likely to have their grade reduced whilst students in high-income areas were […]

Algorithms can drive inequality. Just look at Britain’s school exam chaos

An outcry over alleged algorithmic bias against pupils from more disadvantaged backgrounds has now left teenagers and experts alike calling for greater scrutiny of the technology.

Postcode or performance: How the A Level results of 2020 exposed a broken system

Case study explaining the algorithmic bias inherent in grade prediction for A Level students. Demonstrates the real-world impact AI can have if it is not scrutinised for bias.

The problem with algorithms: magnifying misbehaviour

This news article gives an example of bias in an algorithm governing the first round of admissions to a medical university. The data used to define the algorithm’s output showed bias against both women and people with non-European-looking names.

Mary Madden on Algorithmic Bias in College Admissions

A good introductory video on the use of AI in college admissions, questioning at what point it is acceptable to remove human oversight from admissions completely.

AI in immigration can lead to ‘serious human right breaches’

This video refers to a report from the University of Toronto’s Citizen Lab that raises concerns that the handling of private data by AI for immigration purposes could breach human rights. Because AI tools are trained on datasets, before deploying tools that target marginalized populations we need to answer questions such as: Where does […]

How AI Could Reinforce Biases In The Criminal Justice System

Whilst some believe AI will increase police and sentencing objectivity, others fear it will exacerbate bias. For example, past over-policing of minority communities has generated a disproportionate number of recorded crimes in some areas; this data is passed to algorithms, which in turn reinforce over-policing.

Coded Bias: When the Bots are Racist – new documentary film

This film cuts across all areas of potential racial bias in AI in an engaging documentary film format.

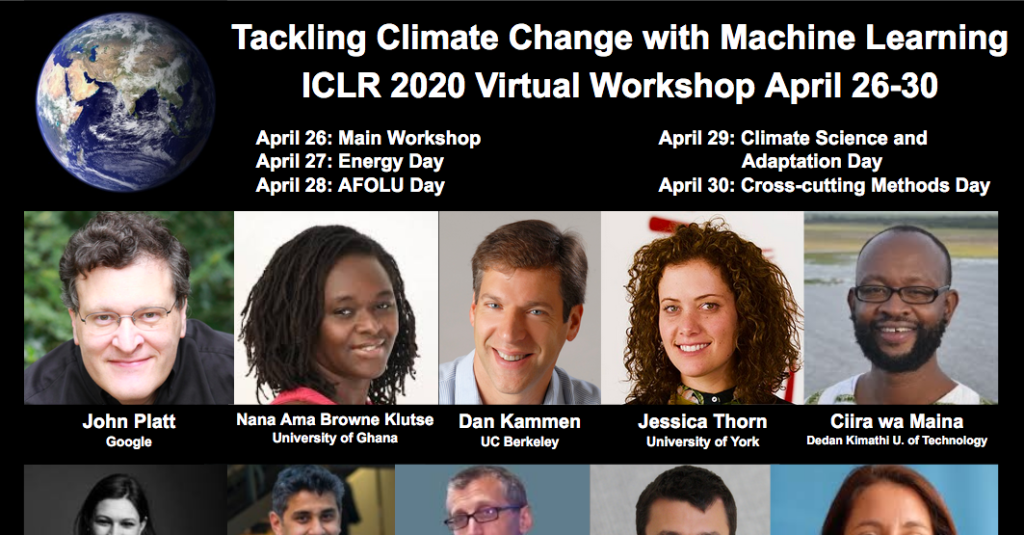

Visualise Climate Change – interactive website

Our project aims to raise awareness and conceptual understanding of climate change by bringing the future closer. A conceptual interactive website that uses AI and climate modelling to show precise, personalised impacts of climate change. Bringing together researchers from different fields, the website aims to act as an educational tool that will produce accurate and vivid […]

Why the Climate Change AI Community Should Care About Weather: A New Approach for Africa

Case Study: Community perspective of the game-changing socio-economic value that could be achieved with better forecasts, especially among vulnerable communities. The paper presents a new way to view this opportunity by better understanding the problem, with the goal of inspiring the Climate Change AI community to contribute to this important aspect of the climate adaptation […]

Using Satellite Images and Artificial Intelligence to Improve Agricultural Resilience

Case Study: Most of Rwanda’s crop production comes from smallholder farms. The country’s agriculture officials have historically had insufficient data on where crops are cultivated or how much yield to expect — a hindrance for government’s future planning. Building on previous work with emerging technologies, machine learning, economics, and agriculture, the paper develops a new […]

We tested Europe’s new lie detector for travellers – and immediately triggered a false positive

4.5 million euros have been pumped into the virtual policeman project meant to judge the honesty of travelers. An expert calls the technology “not credible.” IBorderCtrl’s lie detection system was developed in England by researchers at Manchester Metropolitan University. It claims that its virtual cop can detect deception by picking up on the micro gestures the […]

Artificial intelligence in the courtroom

Covers the impact of AI on litigation, including its current use in reviewing documents, predicting case outcomes and predicting lawyers’ success rates. This article highlights concerns about fallibility and the need for human oversight.

How AI is impacting the UK’s legal sector

We examine the impact of artificial intelligence on the UK’s legal sector

Six ways the legal sector is using AI right now

Law Society partner and equity crowdfunding platform Seedrs explains how developments within AI are taking law firms and solicitors to the next level. An article on how AI can be used in adjudication and law in general. It highlights that although AI has vast potential, it has not yet been broadly adopted.

Facial recognition could stop terrorists before they act

In their zeal and earnest desire to protect individual privacy, policymakers run the risk of stifling innovation. The author makes the case that using facial recognition to prevent terrorism is justified as our world is becoming more dangerous every day; hence, policymakers should err on the side of public safety.

Is police use of face recognition now illegal in the UK?

The UK Court of Appeal has unanimously determined that the use of a face-recognition system by South Wales Police was “unlawful”, which could have ramifications for the widespread use of such technology across the UK.

UK police adopting facial recognition, predictive policing without public consultation

UK police forces are adopting AI technologies, in particular facial recognition and predictive policing, largely without public consultation. This article warns about that lack of consultation and calls for transparency and public input on how these technologies are being used.

The algorithms that detect hate speech online are biased against black people

A new study shows that leading AI models are 1.5 times more likely to flag tweets written by African Americans as “offensive” than other tweets.
