AI and Bias in Healthcare

This guest panel series examines how AI can assist healthcare, with a particular focus on automating tasks, communicating diagnoses and allocating resources. It also examines the sources of bias in AI-integrated systems and what we can do to eliminate it.

Bias at warp speed: how AI may contribute to the disparities gap in the time of COVID-19

Abstract. The COVID-19 pandemic is having a disproportionate impact on minorities in terms of infection rate, hospitalizations, and mortality. Many believe that artificial intelligence could be a solution to guide clinical decision making to overcome this novel disease. […]

The Potential for AI in healthcare

The complexity and rise of data in healthcare means that artificial intelligence (AI) will increasingly be applied within the field. Several types of AI are already being employed by payers and providers of care, and life sciences companies. The key categories … This report discusses current applications of AI as well as potential future applications […]

Addressing Bias: Artificial Intelligence in Cardiovascular Medicine

Artificial intelligence (AI) is providing opportunities to transform cardiovascular medicine. As the leading cause of morbidity and mortality worldwide, cardiovascular disease is prevalent across all populations, with clear benefit to operationalise clinical and biomedical data to improve workflow… A medical paper that examines the potential of artificial intelligence in cardiovascular medicine, where it could hugely benefit patient […]

Debiasing artificial intelligence: Stanford researchers call for efforts to ensure that AI technologies do not exacerbate health care disparities

Medical devices employing AI stand to benefit everyone in society and to reduce general biases in the health care system, but if left unchecked, the technologies could unintentionally perpetuate sex, gender and race biases. The AI […]

Is a racially biased algorithm delaying healthcare for one million black people?

Sweeping calculation suggests it could be — but how to fix the problem is unclear. An estimated one million black adults would be transferred earlier for kidney disease if US health systems removed a ‘race-based correction factor’ from an algorithm they use to diagnose people and decide whether to administer medication. There is a debate […]

If AI is going to be the world’s doctor, it needs better textbooks

Artificial intelligence in healthcare currently reflects the same racial and gender biases as the culture at large. Those prejudices are built into the data. AI technologies are being used to diagnose Alzheimer’s disease by assessing speech. This technology could aid early diagnosis of Alzheimer’s. However, it’s evident that the algorithms behind this technology are trained […]

Can we trust AI not to further embed racial bias and prejudice?

Heralded as an easy fix for health services under pressure, data technology is marching ahead unchecked. But is there a risk it could compound inequalities? Journalist Poppy Noor investigates how black people with melanoma are being underserved in healthcare, and the link to the racist algorithms driving new cancer software. Most of […]

How a Popular Medical Device Encodes Racial Bias

Pulse oximeters give biased results for people with darker skin. The consequences could be serious. COVID-19 care has brought the pulse oximeter, a medical device that measures your blood oxygen saturation, into the home. This article examines research showing that oximetry is racially biased. Oximeters have been calibrated, tested and developed using light-skinned […]

Skin Deep: Racial Bias in Wearable Tech

Health monitoring devices influence the way we eat, sleep, exercise, and perform our daily routines. But what do we do when we discover that the technology we rely on is built on faulty methodology and […]

Artificial Intelligence in Healthcare: The Need for Ethics

The advent of AI promises to revolutionise the way we think about medicine and healthcare, but who do we hold accountable when automated procedures go awry? In this talk, Varoon focuses on the lack of affordable medicines within healthcare and the concerns over racial bias being brought into the healthcare system.

AI, Medicine, and Bias: Diversifying Your Dataset is Not Enough

Using machine learning in medicine as a case in point, Rachel Thomas examines racial bias within the AI technologies driving modern-day medicines and treatments. She argues that while the diversity of your data set and the performance of your model across different demographic groups are important, this is only a narrow slice […]

Understanding AI bias in banking

As banks invest in AI solutions, they must also explore how AI bias impacts customers and understand the right and wrong ways to approach it. AI systems could unfairly decline new bank account applications, block payments and credit cards, and deny loans and other vital financial services and products to qualified customers because of how their […]

Racial Justice: Decode the Default (2020 Internet Health Report)

Technology has never been colourblind. It’s time to abolish notions of “universal” users of software. This is an overview of racial justice in tech and in AI that considers how systemic change must happen for technology to support equity.

AI risks replicating tech’s ethnic minority bias across business

Diverse workforce essential to combat new danger of ‘bias in, bias out’. This short article looks at the link between the lack of diversity in the AI workforce and bias against ethnic minorities within financial services.

Black Loans Matter: fighting bias for AI fairness in lending

Today in the United States, African Americans continue to suffer from financial exclusion and predatory lending practices. Meanwhile the advent of machine learning in financial services offers both promise and peril as we strive to insulate artificial intelligence from our own biases baked into the historical data we need to train our algorithms. A detailed […]

Unmasking Facial Recognition | WebRoots Democracy Festival

This video is an in-depth panel discussion of the issues uncovered in the ‘Unmasking Facial Recognition’ report from WebRoots Democracy. The report found that the use of facial recognition technology is likely to exacerbate racist outcomes in policing, and revealed that London’s Metropolitan Police failed to carry out an Equality Impact Assessment before trialling the technology at […]

Machine Bias – There’s software used across the country to predict future criminals and it’s biased against blacks

This article details software used across the country to predict the likelihood of reoffending. It uses case studies to demonstrate the racial bias in the software’s predictions of the ‘risk’ of further crimes. Even for […]

Algorithms and bias: What lenders need to know

Much of the software now revolutionising the financial services industry depends on algorithms that apply artificial intelligence (AI) – and increasingly, machine learning – to automate everything from simple, rote tasks to activities requiring sophisticated judgment. This article explains (from a US perspective) how the development of machine learning and algorithms has left financial services at risk […]

Climate Change and Social Inequality

A UN working paper that provides the evidence base for a conceptual framework of the cyclical relationship between climate change and social inequality.

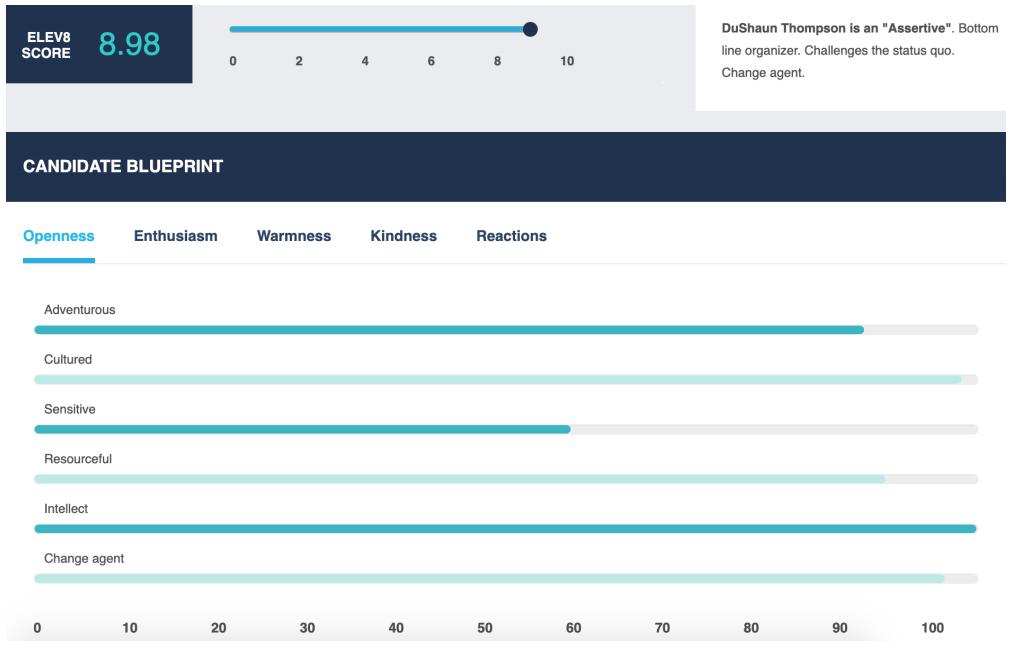

Face-scanning algorithm increasingly decides whether you deserve the job

HireVue claims it uses artificial intelligence to decide who’s best for a job. Outside experts call it “profoundly disturbing.” An article about HireVue’s “AI-driven assessments”. More than 100 employers now use the system, including Hilton and Unilever, and more than a million job seekers have been analysed.

How to use AI hiring tools to reduce bias in recruiting

From machine learning tools that optimize job descriptions, to AI-powered psychological assessments of traits like fairness, here’s a look at the strengths – and pitfalls – of AI. Commercial AI recruitment systems cannot always be trusted to do what their vendors claim. The technical capabilities this type of software offers […]

Using AI to eliminate bias from hiring

AI could eliminate unconscious bias and sort through candidates in a fair way. Many current AI tools for recruiting have flaws, but they can be addressed. The beauty of AI is that we can design it to meet certain beneficial specifications. A movement among AI practitioners like OpenAI and the Future of Life Institute is […]

Rights group files federal complaint against AI-hiring firm HireVue, citing ‘unfair and deceptive’ practices

AI is now being used to shortlist job applicants in the UK: let’s hope it’s not racist. AI-based video interviewing software, such as that made by HireVue, is being used by companies in the UK for the first time to shortlist the best job applicants. HireVue’s “AI-driven assessments,” which more than […]

In the Covid-19 jobs market, biased AI is in charge of all the hiring

As millions of people flood the jobs market, companies are turning to “biased and racist” AI to sift through the avalanche of CVs. This can lock certain groups out of employment and […]

These robots handle dull and dangerous work humans do today — and can create new jobs

Avidbots, iUNU and 6 River Systems are among the start-ups on CNBC’s Upstart 100 list that are putting robots to work doing “dull, dirty and dangerous” tasks previously handled by humans. This article highlights the benefits of using AI and robots in certain work situations.

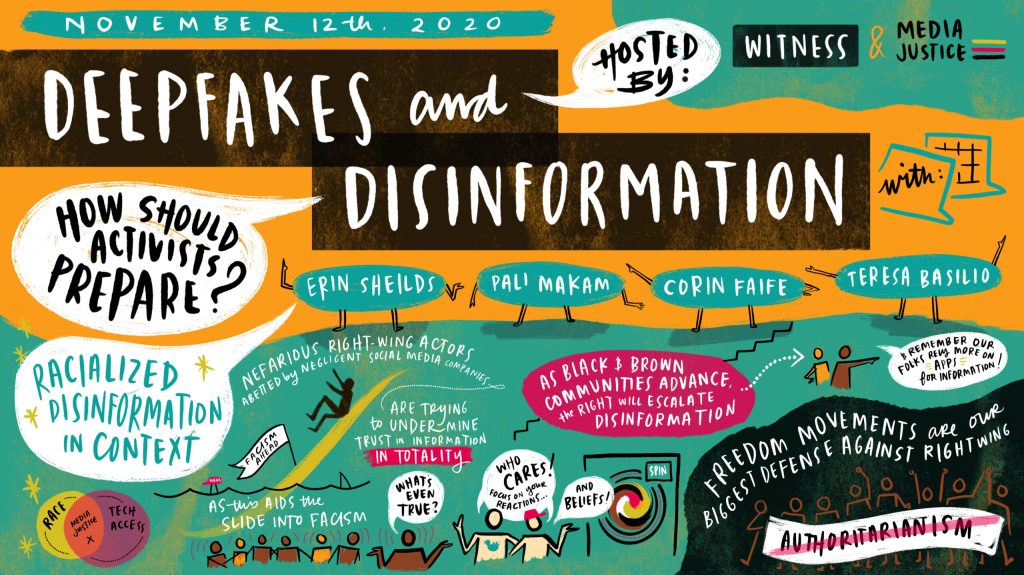

Deepfakes and Disinformation

Earlier this month, in the aftermath of a decisive yet contested election, MediaJustice, in partnership with MediaJustice Network member WITNESS, brought together nearly 30 civil society groups, researchers, journalists, and organizers to discuss the impacts visual disinformation has had on institutions and information systems. Deepfakes can be used in racialised disinformation […]

Software that monitors students during tests perpetuates inequality and violates their privacy

In this opinion piece, a university librarian claims that millions of algorithmically proctored (invigilated) tests take place every month around the world, a number that increased exponentially during the pandemic. In his experience, algorithmic ‘proctoring’ reinforces white supremacy, sexism, ableism, and transphobia, invades students’ privacy and is often a civil rights violation.

Beyond gadgets: EdTech to help close the attainment gap

A video overview of a report advocating for the use of edtech, or education technology, which includes many AI solutions, in order to close the “Opportunity Gap” between marginalised and “mainstream” pupils.

Speech recognition in education: The powers and perils

This article weighs up the huge potential of voice recognition technology to gain insights into children’s language and reading development against a 16% difference in misidentified words between white and black voices.

AI teachers may be the solution to our education crisis

This article looks at the global shortage of teachers and how AI might be used to supplement and provide lacking education, and argues that it could be less biased than teachers, thereby resolving inequity.

Can computers ever replace the classroom?

This article considers the various ways AI can be used during the pandemic to boost virtual learning, focusing on Chinese company Squirrel AI, which is reporting good results with computer tutors and personalised learning, and weighing up the risks, such as surveillance of Muslim Uighurs in Xinjiang.

AI is coming to schools, and if we are not careful, so will its biases

This article looks at what issues may arise for children from minority and underprivileged communities from replacing teachers with AI.

Flawed Algorithms are Grading Millions of Students’ Essays

Automated essay grading in the US has been shown to mark down African American students and those from other countries.

Student Predictions & Protections: Algorithm Bias in AI-Based Learning

This short article gives an example of how predictive algorithms can penalise underrepresented groups of people. In this example, students from Guam had their pass rate underestimated versus other nationalities, because of the low number of students in the data set used to build the prediction model, resulting in insufficient accuracy.

How will artificial intelligence change admissions?

An article detailing how AI might change admissions in terms of the process, the consequences and how students from some countries could be at risk of bias.

The danger of predictive algorithms in criminal justice

Dartmouth professor Dr. Hany Farid reverse engineers recommendation engines built to mete out justice in today’s criminal justice system, exposing their inherent dangers and potential biases. In this video, he gives an example of how the number of crimes is used as a proxy for race.

How Flood Mapping From Space Protects The Vulnerable And Can Save Lives

Case study: a pioneering flood mapping and response organization that uses high-cadence, high-resolution satellite imagery to build country-wide flood monitoring and dynamic analytics systems for the most vulnerable around the world.

AI and Climate Change: The Promise, the Perils and Pillars for Action

The paper provides pillars of action for the AI community, and includes a focus on climate justice where the author recommends that environmental impacts should not be externalised onto the most marginalised populations, and that the gains are not only captured by digitally mature countries in the global north. This will require centring front-line communities […]

Apprise: Using AI to unmask situations of forced labour and human trafficking

The creators of the Apprise app share how they created a system to help workers in Thailand avoid vulnerable situations. Forced labour exploiters continually tweak and refine their practices of exploitation in response to changing inspection policies and practices. The article showcases efforts to create AI tools that predict changing patterns of human exploitation. […]

AI can be sexist and racist — it’s time to make it fair

Computer scientists must identify sources of bias, de-bias training data and develop artificial-intelligence algorithms that are robust to skews in the data. The article raises the challenge of defining fairness when building databases. For example, should the data be representative of the world as it is, or of a world that many would aspire to? […]

Establishing an AI code of ethics will be harder than people think

Over the past six years, the New York City police department has compiled a massive database containing the names and personal details of at least 17,500 individuals it believes to be involved in criminal gangs. The effort has already been criticized by civil rights activists who say it is inaccurat… The New York police department has […]

Adjudicating by Algorithm, Regulating by Robot

Sophisticated computational techniques, known as machine-learning algorithms, increasingly underpin advances in business practices, from investment banking to product marketing and self-driving cars. Machine learning—the foundation of artificial intelligence—portends vast changes to the private sect… This article highlights the benefits of artificial intelligence in adjudication and making law in terms of improving accuracy, reducing human biases […]

‘Fake news’: Incorrect, but hard to correct. The role of cognitive ability on the impact of false information on social impressions

The present experiment examined how people adjust their judgment after they learn that crucial information on which their initial evaluation was based is incorrect. In line with our expectations, the results showed that people generally do adjust their attitudes, but the degree to which they correct their assessment depends on their cognitive ability. A study […]

Responsible AI for Inclusive, Democratic Societies: A cross-disciplinary approach to detecting and countering abusive language online

A research paper about responsible AI. Toxic and abusive language threaten the integrity of public dialogue and democracy. In response, governments worldwide have enacted strong laws against abusive language that leads to hatred, violence and criminal offences against a particular group. The responsible (i.e. effective, fair and unbiased) moderation of abusive language carries significant challenges. Our […]

AI researchers say scientific publishers help perpetuate racist algorithms

The news: An open letter from a growing coalition of AI researchers is calling out scientific publisher Springer Nature for a conference paper it reportedly planned to include in its forthcoming book Transactions on Computational Science & Computational Intelligence. The paper, titled “A Deep Neural… Crime […]

Google think tank’s report on white supremacy says little about YouTube’s role in driving people to extremism

A Google-funded report examines the relationship between white supremacists and the internet, but it makes scant reference—all of it positive—to YouTube, the company’s platform that many experts blame more than any other for driving people to extremism. YouTube’s algorithm has been found to direct users to extreme content, sucking them into violent ideologies.

Is Facebook Doing Enough To Stop Racial Bias In AI?

After recently announcing Equity and Inclusion teams to investigate racial bias across their platforms, and undergoing a global advertising boycott over alleged racial discrimination, is Facebook doing enough to tackle racial bias? Disinformation driven via bots that game the AI systems of social media platforms to reinforce racial myths and attitudes as well as the […]

AI bias in news media generation

Yeah, great start after sacking human hacks: Microsoft’s AI-powered news portal mixes up photos of women-of-color in article about racism. Blame Reg Bot 9000 for any blunders in this story, by the way. News media is being automated and generated by AI, which can inherit bias from the data sets used for text generation.

Twitter image cropping

Another reminder that bias, testing, diversity is needed in machine learning: Twitter’s image-crop AI may favour white men, women’s chests. Strange, it didn’t show up during development, says social network. Automated image cropping favours white people in framing and de-emphasises the visibility of non-white people.

Google Cloud’s image tagging AI

Google Cloud’s AI recog code ‘biased’ against black people – and more from ML land. Including: Yes, that nightmare smart toilet that photographs you mid… er, process. Digital image tagging attaches negative context to non-white people.

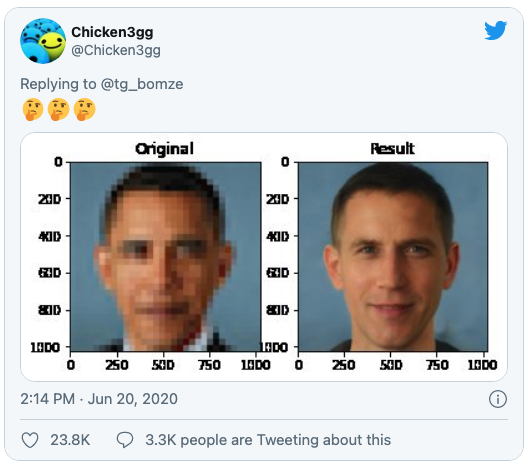

Image processing

Once again, racial biases show up in AI image databases, this time turning Barack Obama white. Researchers used a pre-trained off-the-shelf model from Nvidia. AI image processing can misrepresent non-white people, in this case by rendering them as white.